Are Machine Interpreting Tools Any Good? We Tested Four.

Ever wonder how machine interpreting tools actually perform?

I teamed up with my friend Nora Díaz to test four of them.

Our conclusion? They're no match for human interpreters, at least not yet.

To understand why, it helps to know what machine interpreting is.

What is machine interpreting?

Tools like Boostlingo, Kudo and Wordly all offer “automatic speech translation” solutions, which aim to convert speech in one language into AI-generated speech in another. Google Meet and ChatGPT offer similar features.

Most machine interpreting is a three-step process:

Step 1. Automatic speech recognition (software converts speech into text).

Step 2. Machine translation (software translates the text).

Step 3. Text-to-speech (AI voices read the translation aloud).

Each step builds on the previous one, so errors cascade through the process – starting with speech recognition.
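To make the cascade concrete, here is a minimal Python sketch of the three steps chained together. It is a toy illustration, not how any of the tools we tested actually work: the function names, the tiny glossary, and the mis-heard surname are all invented for the example, and each step is a stub standing in for real software.

```python
def recognize_speech(spoken_words: list[str]) -> str:
    """Step 1 (stub): speech recognition that mis-hears one surname.

    The 'Díaz' -> 'days' error is a made-up example of a name
    being mis-transcribed.
    """
    mishearings = {"Díaz": "days"}
    return " ".join(mishearings.get(word, word) for word in spoken_words)


def translate(text: str) -> str:
    """Step 2 (stub): word-by-word English-to-Spanish lookup.

    It only sees the transcript, so it cannot know that 'days'
    was originally a surname.
    """
    glossary = {"days": "días", "speaks": "habla", "today": "hoy"}
    return " ".join(glossary.get(word, word) for word in text.split())


def synthesize(text: str) -> str:
    """Step 3 (stub): stands in for an AI voice reading the text aloud."""
    return f"[voice] {text}"


def interpret(spoken_words: list[str]) -> str:
    """Run the three steps in order; each one consumes the previous output."""
    transcript = recognize_speech(spoken_words)
    translation = translate(transcript)
    return synthesize(translation)


print(interpret(["Nora", "Díaz", "speaks", "today"]))
# → [voice] Nora días habla hoy
```

In this sketch the translation and voice steps each do their job on the input they are given, yet the listener still hears the wrong thing, because neither step can see or repair the error made in step 1.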

If you’ve ever enabled Closed Captions on Zoom (or even your TV), you know that automatic speech recognition works, but only to a point. It often mis-transcribes names and specialized terminology, struggles with sentence breaks and punctuation, and lags behind the speaker – not exactly a solid foundation.

Even with a decent script, machine translation still struggles with nuance or cultural references, so translations are literal at best and nonsensical at worst.

Finally, text-to-speech adds another layer of problems. AI can't convey speakers' emotions – or even recognize when one sentence ends and another begins. Even when the message is delivered completely and accurately, these issues make it hard to understand and follow what's being said.

Adding to these issues, research shows that unnatural speech causes listening fatigue. Most people can't listen to flat, monotone, and poorly paced speech for more than a few minutes.

While AI voices can emulate human speech when reading entire documents, they turn robotic when delivering short fragments in real time, making text-to-speech less suitable for live events.

What we tested and how

To see how this played out in practice, we tested two dedicated machine interpreting tools (Boostlingo and Wordly) and two general-purpose apps with built-in machine interpreting features (Google Meet and ChatGPT).

We ran two types of tests. First, we had Boostlingo and Wordly interpret the same four video talks. We chose presentations that posed different challenges: a French speaker with a heavy accent, a Spanish panel discussion with multiple speakers, a fast-paced Italian presentation with slides, and a rapid-fire TED Talk in Mandarin.

Next, we had Google Meet Speech Translation and ChatGPT Advanced Voice Mode interpret a conversation between us in real time.

(You can listen to the demos from these tests in our free Machine Interpreting Showdown.)

What we found

Test one: Wordly and Boostlingo

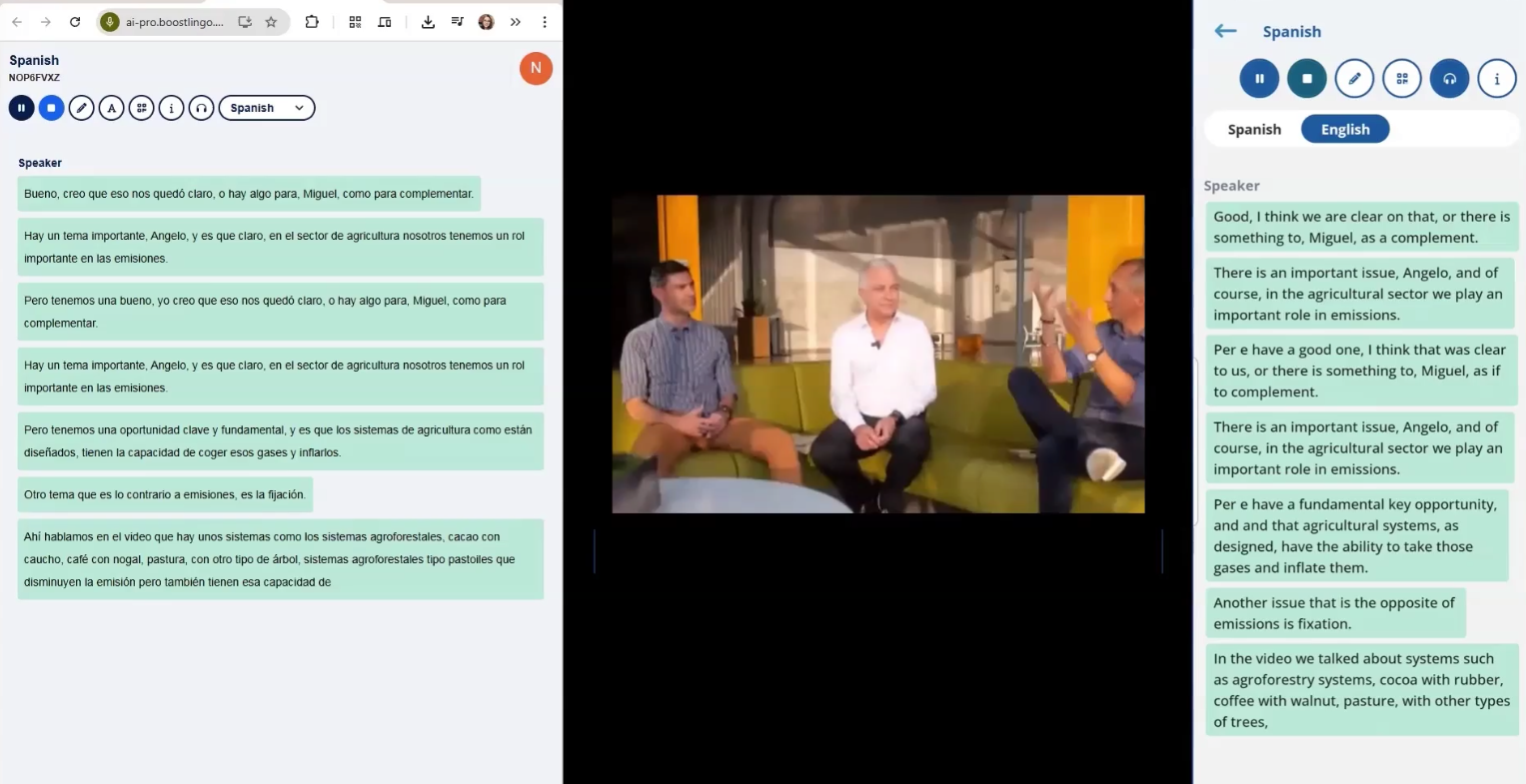

Boostlingo struggled to differentiate between speakers during a Spanish panel

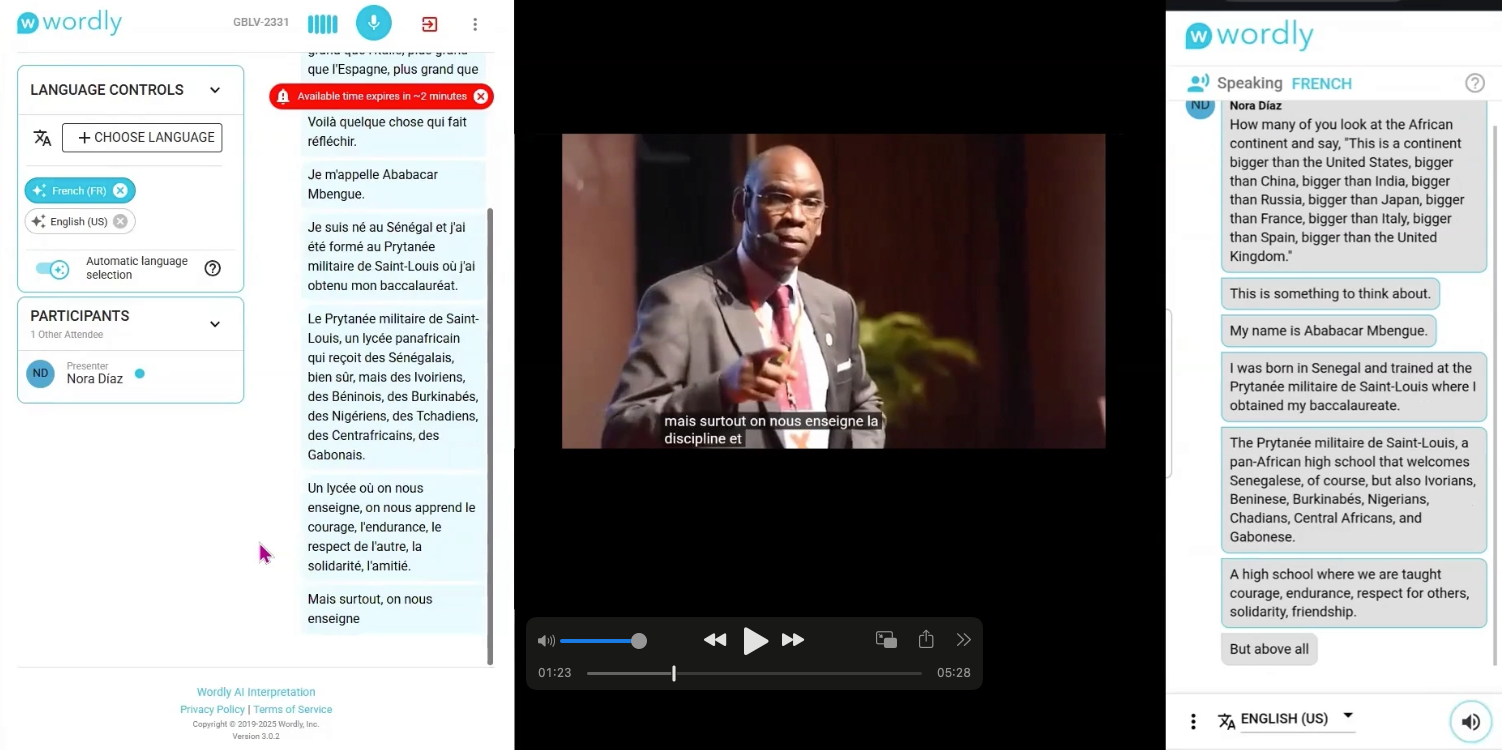

Wordly and Boostlingo’s robotic delivery and weird pacing made their interpretation hard to follow and sometimes impossible to understand, even when the transcription and translation were acceptable (which wasn’t always the case).

The lag times were painful – Boostlingo took up to 42 seconds to get started, while Wordly sometimes paused mid-presentation, then interpreted at 2x speed to make up for lost time.

Both delivered the content of individual presentations to a reasonable standard, but struggled when multiple speakers and casual speech were involved. Although Wordly handled this configuration better, something still got lost in translation.

Pacing was a challenge, particularly for Boostlingo, which struggled with sentence breaks. (“He fell in love with a woman” became “He fell. In love. With a woman.”). Meanwhile, Wordly’s rushed delivery (to compensate for lag time) was exhausting to listen to. And the lag in both tools meant we often heard the interpretation after the speaker had already moved on to a different slide.

Wordly paused 42 seconds before interpreting the first sentence of a speech in Senegalese French

Between the two, Wordly was the winner – with better intonation, clearer sentence breaks, and more complete interpretations. But that's not saying much. It's hard to imagine anyone sitting through more than a few minutes of interpreting from either tool. We'd only recommend them as a last resort, and even then, you'd be better off sticking with the written transcription/translation and skipping the torturous text-to-speech entirely.

Test two: Google Meet and ChatGPT

Google Meet’s Speech Translation works by cloning a speaker’s voice, so they actually sound like they’re speaking in another language. At the moment, it is only available between English and French, German, Italian, Portuguese or Spanish with a paid Google AI Pro subscription, and in limited regions.

It made simultaneous conversation possible, despite occasional mistakes that required clarification or confused the listeners. For example, it changed Nora’s gender and mistranslated her request for a pay raise (a “bonus”) as asking for a “bond.”

As a plus, it mimicked Nora’s voice and intonation reasonably well, although her accent in English varied over the course of our 7-minute conversation, switching from Mexican-inflected to North American to Indian English.

Activating the “Speech translation” feature in Google Meet.

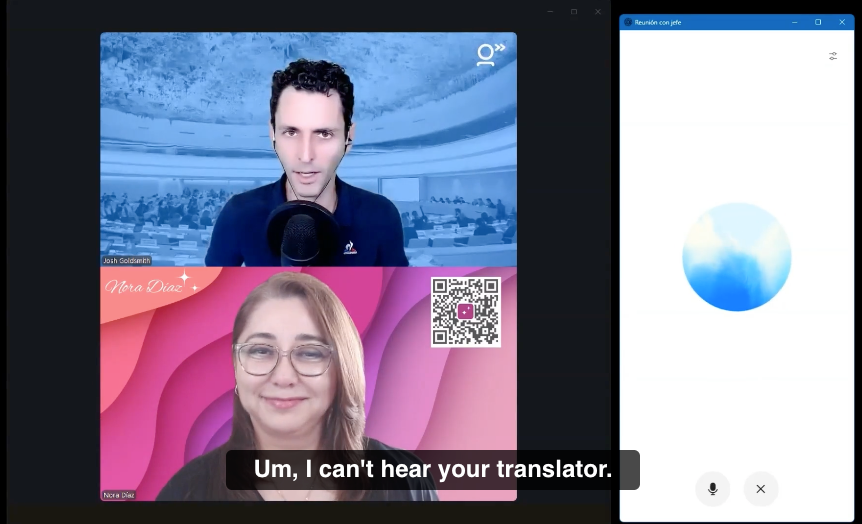

Meanwhile, ChatGPT’s Advanced Voice Mode only allowed for consecutive interpreting. To avoid confusion, we had to make a conscious effort to take turns speaking and wait for interpreting to begin, making our meeting drag on and feel choppy.

Setting up voice mode for a remote meeting wasn’t easy, and we encountered occasional tech hiccups. For example, ChatGPT occasionally “forgot” to interpret and, when pressed, blurted out a translation in the wrong language. Still, it got the job done – just not smoothly.

Struggling to hear the interpretation from ChatGPT's Advanced Voice Mode during a remote meeting on Zoom

Compared with Wordly and Boostlingo, Google Meet and ChatGPT’s interpreting was less demanding on our brains and ears: intonation, pacing, and sentence breaks were all superior. Still, with the occasional hiccups, awkward pauses, mistranslations – and in the case of ChatGPT, extended waits – our conversation wasn’t exactly natural.

Google’s interpreting feature suits online meetings because it allows simultaneous conversation and is built into Google Meet, whereas ChatGPT’s Advanced Voice Mode works best in person, where two people can take turns speaking using their mobile phones.

Conclusion

Our tests confirmed what we already suspected: machine interpreting, at least for now, is more of a quick fix than a solid plan.

It could work for short-form, scripted, informational content, or brief, low-stakes conversations when human interpreting isn’t an option. But it’s not ready to take on anything that’s unscripted, nuanced, or high-stakes.

That said, AI is moving fast. Staying up to date is key to remaining relevant and helping our clients know when machine interpreting works – and when a human interpreter is still the safest bet. (Spoiler: that’s most of the time.)